The model trained by standard IMT systems may misinterpret the objects by using unrelated features.

HuTics

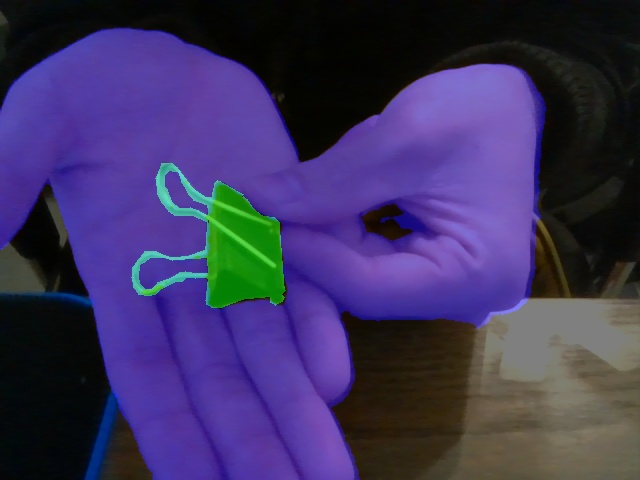

HuTics is a human deictic gestures dataset that includes 2040 images collected from 170 people. It covers four kinds of deictic gestures to objects: exhibiting, pointing, presenting and touching. Note that we only recruited human labelers to annonate the objects of interest, and the human hands (and arms) are predicted using [this project]. Therefore, the hand annotations in the dataset are not always correct.

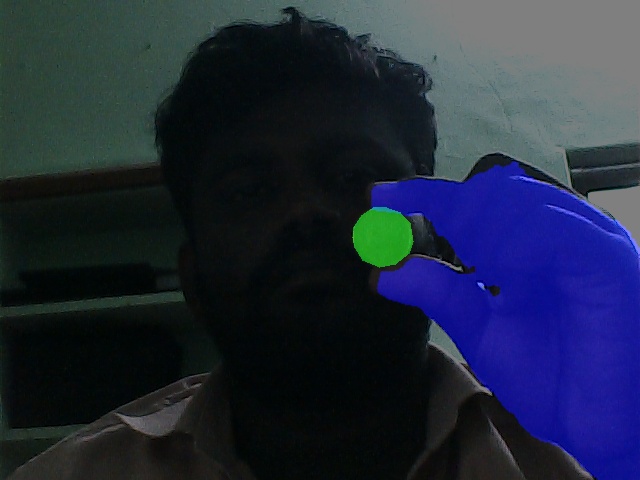

Exhibiting

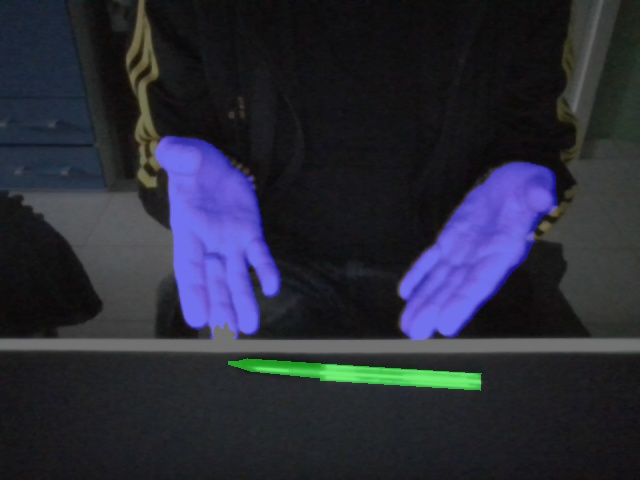

Pointing

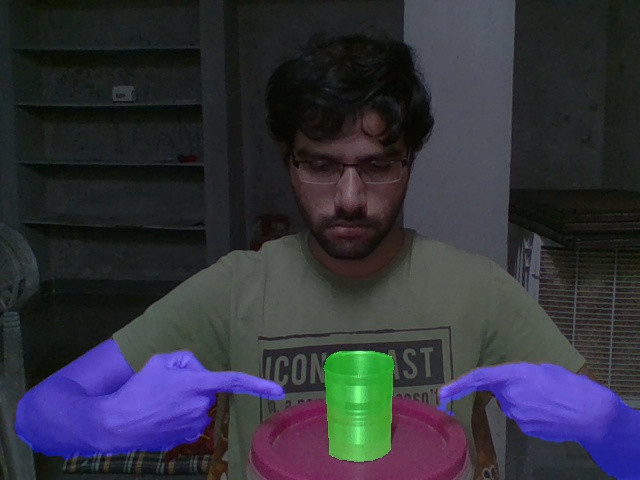

Presenting

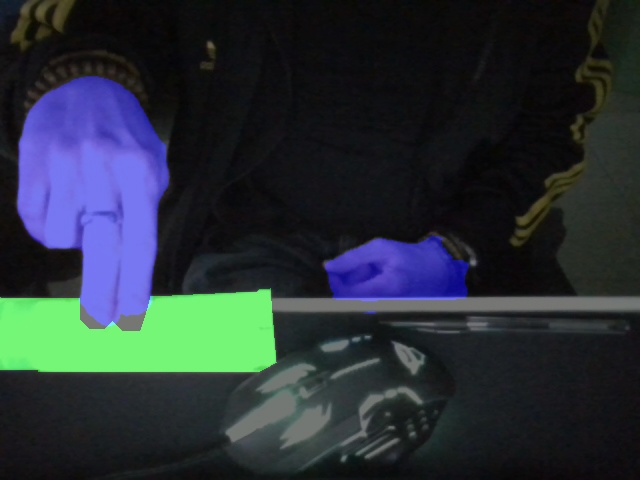

Touching